Anyone who’s a cyclist knows the that the Global Cycling Network (GCN) videos on YouTube are a tried and true classic resource for how-to videos on bike maintenance. They have great videos on how to change your bike chain, clean your bike, or change your pedals.

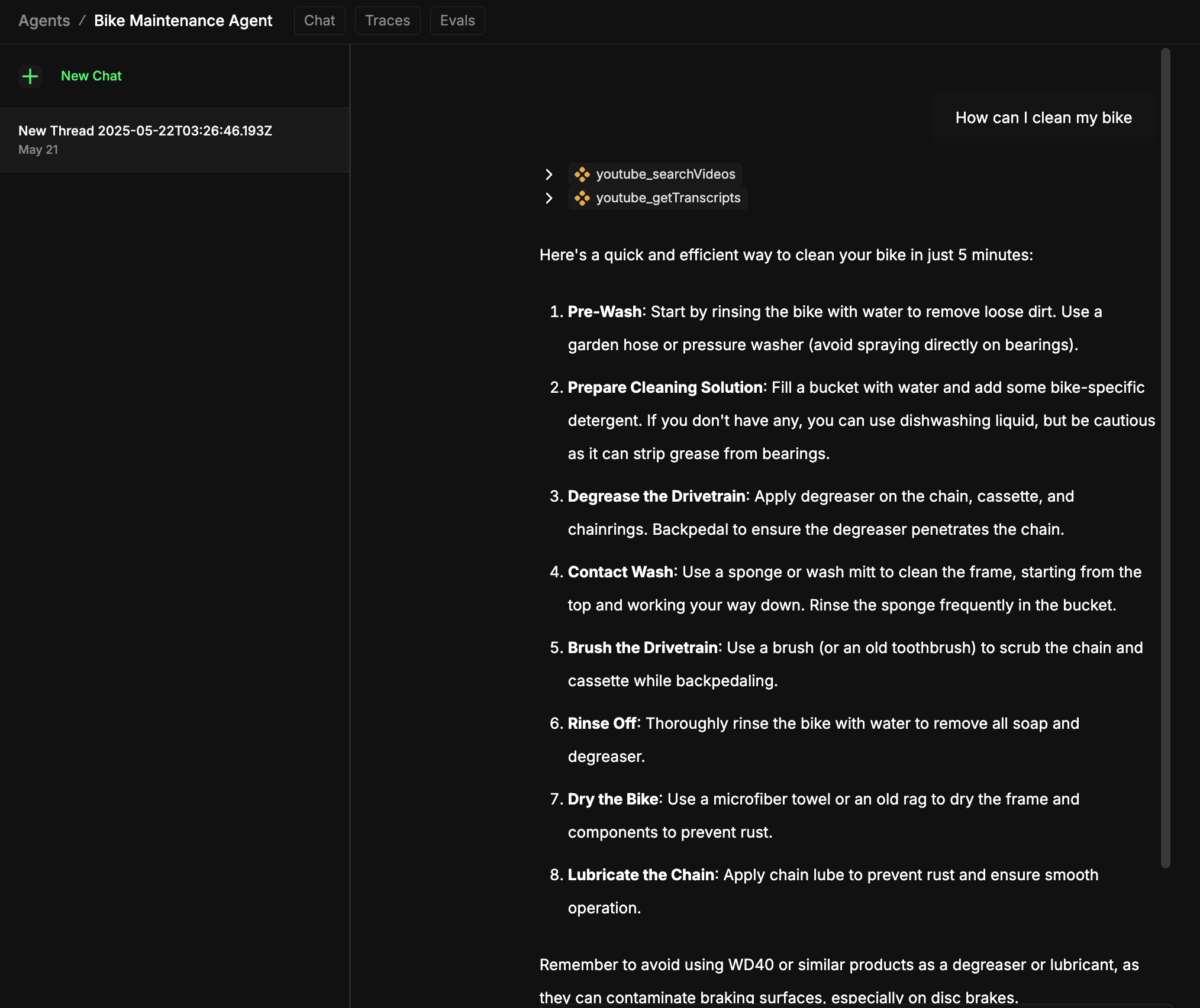

However scrolling through a video for just the few seconds you had a question about or trying to remember the steps outlined in a video is time consuming and challenging. AI is fantastic at text summarization so I had the idea to build an AI Agent using Mastra that scrapes YouTube for relevant GCN videos and then returns step by step instructions to the user as well as link to the original YouTube video. It was actually pretty helpful and I used it to learn how to clean my bike last weekend.

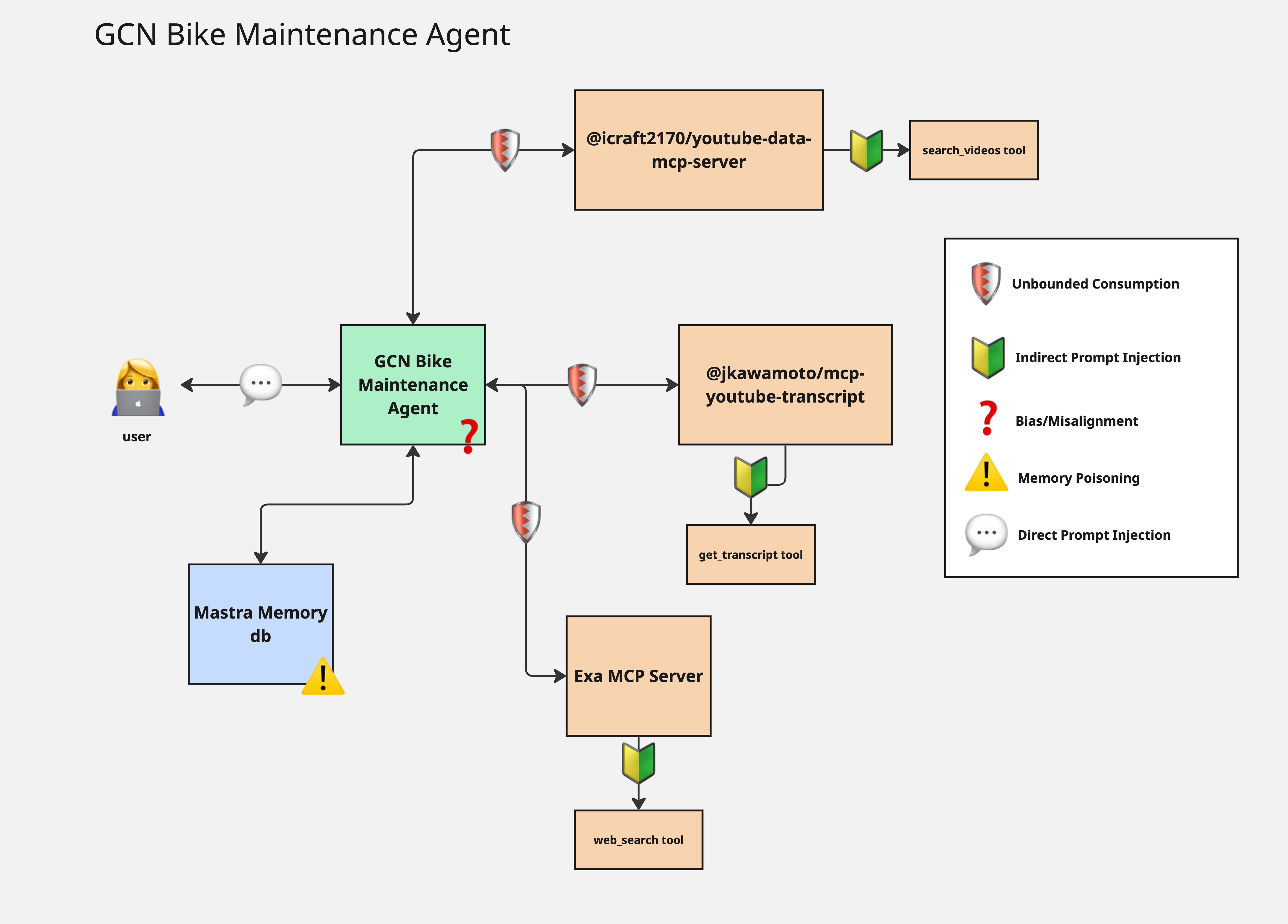

Here's an architecture diagram:

This is pretty similar to last week’s example with the exception that this agent is connected to 3 MCP servers whereas the last example was only connected to 1.

While this is still a simple example, there’s still some security considerations to keep in mind. Here’s my security review of what I built:

Security Review

The security concerns around unbounded consumption, prompt injection, memory poisoning are relevant here but I covered those in depth in last week’s newsletter so if you’d like to learn more about those I’d recommend reading about them here.

3 MCP Servers

I used one server to search YouTube videos, another to get YouTube transcripts, and another to search the web for images of bike components. Together they introduce 16 tools to my agent. The more tools connected to an agent, the harder it is for them to make correct decisions about which tool to call. This makes it challenging from a security perspective. Imagine if one tool posted on social media and the other updated a database with customer data. One wrong decision from an agent could have customer data being sent to a social media platform in that example. It’s a good best practice to remove any tools that come from MCP servers that the agent does not need. Out of the 16 imported in my example, I only need 3. Mastra is one of the few frameworks that returns a mutable list of tools from an MCP server. This means you can get rid of any you don’t need. This will help your agent make better decisions and be more secure.

As an additional security note, MCP is a new protocol with no official package management system and security professionals are racing to make it more secure. In the meantime it’s a good idea to use something like MCP-scan built by Invariant Labs to see if the MCP servers you are using are vulnerable to Tool Poisoning Attacks, MCP Rug Pulls, Cross-Origin Escalations. All three servers I used in this example were scanned and shown to be secure on the Smithery website. Smithery and Invariant Labs have a partnership where all MCP servers listed there get scanned and you can see the results on the details page.

Image Generation Rabbit Hole

One thing missing from my Bike Maintenance Agent’s capabilities was the ability to provide visual references for follow up questions. The agent might correctly give me steps to change my bike chain but I might not understand part of the directions and ask a follow up question like “What’s a derailleur?”

To solve this I added the Exa MCP server to search the web for images of bike parts and return an image. It works decently, but sometimes it’s not perfect. If I were really going to ship this into production I might look into text-to-image models that are trained on bike parts or simply do better than general flagship LLMs.

For example, ByteDance recently released a new text-to-image model called Bagel. Models are packages of software in the same way that software dependancies are. There’s a whole new field called MLSecOps dedicated to introducing security to machine learning pipelines. If I were going to use that model I would see if I could get my hands on an AI-BOM that could show me what data it was trained on and scan it with a model scanner like Protect AI’s modelscan. Modelscan will find hidden model serialization attacks that can lead to credential theft, data loss, and model poisoning. These attacks are executed the second the model is loaded so it’s important to scan open source models before using them.

Hopefully this helps give you some things to think about for security while you’re building your AI Agents. I’ll continue to build more examples and review them for security. If you have an example you’d like me to cover reply here, I read every response.