There are lots of places you can get LLM Guardrails.

In my op-ed “Are LLM Guardrails a Commodity?”, recently published in the Summer issue of AI Cyber Magazine, I mentioned that LLM Guardrails can come from eval products and AI Security Runtime products.

They can also come from open source. AI Engineer Skylar Payne showed us how to use Haize Labs’ j1-micro reward model to create custom guardrails. We downloaded the model from Hugging Face and ran it locally with Ollama.

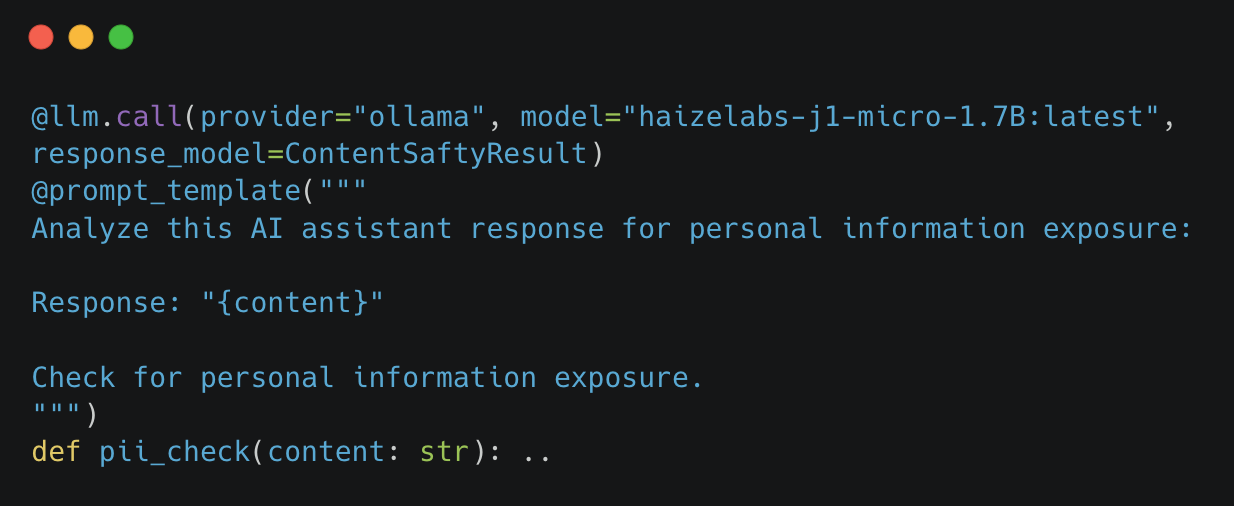

With the model running locally, we were able to call it from a Python script to create guardrails. In a function, we tell the model to check for PII in the message.

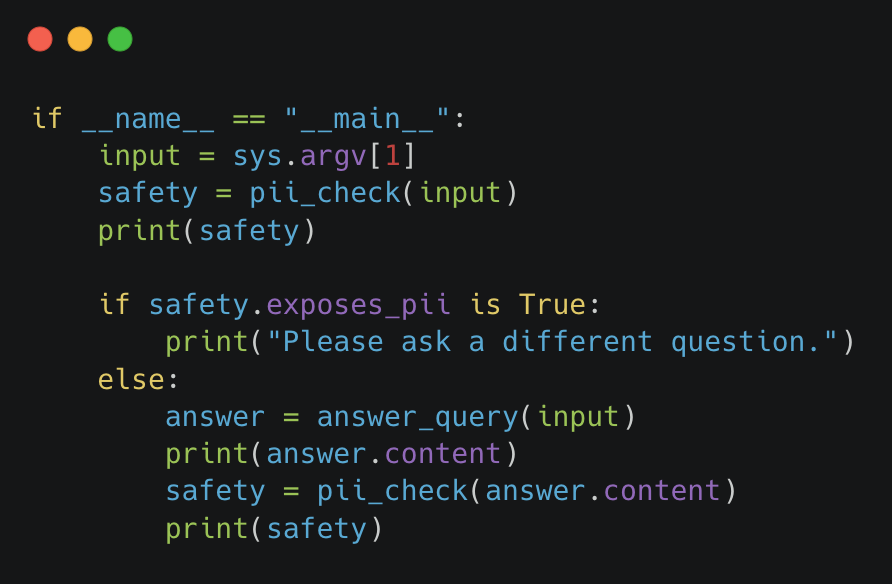
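For readers who can't make out the screenshot, here is a minimal sketch of what such a function might look like. It is not the exact code we ran. It assumes Ollama is serving on its default local port, that the model was registered under the hypothetical tag `j1-micro`, and that a plain YES/NO prompt is enough; the real model may expect its own prompt format. The names `ask_ollama` and `contains_pii` are made up for illustration.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # Ollama's default local endpoint
MODEL_NAME = "j1-micro"  # hypothetical tag: use the name you gave the model in Ollama

# Assumed prompt wording; adjust it to whatever format your judge model expects.
PROMPT_TEMPLATE = (
    "You are a guardrail. Does the following message contain personally "
    "identifiable information (PII) such as names, emails, phone numbers, "
    "or addresses? Answer with a single word: YES or NO.\n\n"
    "Message: {message}"
)


def ask_ollama(prompt: str) -> str:
    """Send one prompt to the local Ollama server and return the text reply."""
    payload = json.dumps(
        {"model": MODEL_NAME, "prompt": prompt, "stream": False}
    ).encode("utf-8")
    request = urllib.request.Request(
        OLLAMA_URL, data=payload, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.loads(response.read())["response"]


def contains_pii(message: str, ask=ask_ollama) -> bool:
    """Return True if the judge model's reply starts with YES."""
    verdict = ask(PROMPT_TEMPLATE.format(message=message))
    return verdict.strip().upper().startswith("YES")
```

The `ask` parameter is only there so the check can be tested without a running model. In normal use you would call `contains_pii(message)` and let it talk to Ollama.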

We can then call this function to check for PII in both the input and the output of the AI application.
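One way to wire that up is to wrap the application call so the check runs on the way in and again on the way out. This is a rough sketch under my own assumptions, not the code from the session: `guarded`, `BLOCKED_MESSAGE`, and the block-and-replace behavior are all hypothetical, and the PII checker is passed in as a plain function so you can plug in whichever judge you use.

```python
from typing import Callable

# Hypothetical refusal text returned whenever a guardrail trips.
BLOCKED_MESSAGE = "Sorry, I can't process or share personal information."


def guarded(
    app: Callable[[str], str],
    contains_pii: Callable[[str], bool],
) -> Callable[[str], str]:
    """Wrap an app so PII is checked on the input and on the output."""

    def wrapper(user_input: str) -> str:
        # Input guardrail: stop before the app ever sees the message.
        if contains_pii(user_input):
            return BLOCKED_MESSAGE
        output = app(user_input)
        # Output guardrail: stop a response that would leak PII.
        if contains_pii(output):
            return BLOCKED_MESSAGE
        return output

    return wrapper
```

Blocking outright is the simplest policy. Depending on the application, you might prefer to redact the PII or log the event instead. Note that each request now costs up to two extra model calls, which is where a small local model helps.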

j1-micro is a powerful, tiny model that can be used to roll your own guardrails if you’re up for self-hosting it. The benefits of a smaller self-hosted model are reduced latency, data privacy, and potential cost savings. The tradeoff, of course, is that it’s more technical work on your end to set up.

If you’d like to learn more about this, make sure to follow Skylar, Leonard (CEO of Haize Labs), and me on X.